Quantum Computing: When Computers Meet Schrödinger’s Cat

I. Starting with a Nobel Hotspot: Why Is Quantum Moving "From the Microscopic to Engineering"?

The 2025 Nobel Prize in Physics was awarded to John Clarke, Michel H. Devoret, and John M. Martinis for their breakthroughs in quantum mechanics, which laid the foundations for quantum computing. The award put quantum science back in the spotlight and naturally prompted renewed discussion of quantum computing. At around the same time, in a major technical advance, Google announced its new quantum algorithm, “Quantum Echoes”, running on its Willow quantum chip and achieving a verifiable quantum advantage, reportedly about 13,000 times faster than classical supercomputers. With both a theoretical high point and a practical milestone on the table, it’s worth asking: quantum computing and quantum mechanics both carry “quantum” in the name, but how exactly are they related? Before diving into quantum computing, we first need to briefly explain what “quantum” means.

Quantum refers to the odd but powerful rules that govern tiny particles like atoms or electrons. Imagine electrons appearing as “probability clouds”, able to exist in multiple places at once, unlike everyday objects. Quantum computing leverages those rules to tackle problems classical computers struggle with.

II. A Brief History of Quantum Computing: From Thought Experiments to Multiple Parallel Paths

The development of quantum computing can be seen as a technological long march spanning over 40 years, progressing step by step from bold concepts to initial prototypes. The story dates back to 1981, when physicist Richard Feynman proposed the idea of "simulating computation with quantum systems": because classical computers are extremely inefficient at simulating quantum phenomena, it would be better to let "nature solve problems with its own rules." In 1985, David Deutsch went further and proposed the theoretical framework of the quantum Turing machine, showing that quantum computers are feasible in principle and have potential advantages for certain problems.

A milestone that truly excited the industry came in 1994: Peter Shor published a quantum algorithm that could efficiently factor large integers, meaning that a sufficiently large quantum computer would outperform classical computers on this problem (this directly threatened encryption systems like RSA, drawing global attention). Soon after, in 1996, Lov Grover's database search algorithm once again demonstrated the appeal of quantum acceleration. These theoretical breakthroughs injected immense confidence and funding into quantum computing.

Subsequently, experimental progress began to catch up: in the late 1990s, scientists achieved simple operations with two quantum bits in laboratories, proving that quantum computing was not just theoretical. In 2011, Canadian startup D-Wave announced a quantum annealing device with 128 qubits, touted as the world's first commercial quantum computer. Through the 2010s, tech giants and startups competed fiercely in the quantum race: various technological routes such as superconducting qubits and ion traps flourished, and the number of qubits on quantum chips gradually climbed from a dozen to over fifty. In 2019, Google's "Sycamore" processor (53 qubits) completed a specific computational task in 200 seconds that classical supercomputers would reportedly take thousands of years to accomplish. This event was dubbed "quantum supremacy," marking the first time a quantum computer surpassed classical computation on a specific task, although the advantage was limited to that narrow scenario and sparked some debate.

Stepping into the 2020s, quantum computing R&D in China, the US, and other countries accelerated further: companies like IBM successively released chips with hundreds of qubits, and China launched its 72-qubit superconducting quantum computer "Origin Wukong" (which went online in 2024 and is used for fine-tuning billion-parameter AI models), demonstrating continuous progress in quantum computing hardware. Although current quantum computers are still relatively primitive and error-prone, the journey from nothing to something is remarkable: yesterday's whimsical ideas are gradually growing into prototypes, and quantum computing is accelerating towards reality.

III. Principles at a Glance: Three "Superpowers" and Two "Hard Thresholds"

What is a Qubit?

Imagine a classical computer bit as a coin: it's either heads (0) or tails (1), with no in-between. A qubit, however, is like a spinning coin: before observation, it exists in a "fuzzy" state of both heads and tails simultaneously. This ability to hold a weighted combination of both possibilities is what quantum algorithms exploit, and for certain problems it can yield very large, sometimes exponential, speedups.

Quantum computers are highly anticipated precisely because they leverage three unique "superpowers" inherent in quantum mechanics. Below are simple analogies for each:

- Superposition – The "Doppelgänger" Technique: Like a magician appearing in multiple places at once. A qubit can exist in both 0 and 1 states simultaneously, like a coin spinning in mid-air. With 2 qubits, they represent 4 combinations (00, 01, 10, 11) at once; 5 qubits represent 32. A quantum algorithm can act on all of these combinations in a single step and then use interference to amplify the correct answers, which for some problems is far faster than a classical computer checking routes one by one (e.g., finding the optimal travel itinerary).

- Quantum Entanglement – "Telepathy" Between Particles: Like twins who instantly feel each other's pain, no matter the distance. When two qubits are entangled, measuring one instantly determines the other's state, even miles apart (although this cannot be used to send information faster than light). Entanglement is what lets five qubits carry correlations across all 32 combinations at once, which is why quantum computers are expected to simulate complex molecules (like drug compounds) that would take classical computers an impractically long time.

- Measurement Effect – "Schrödinger's Cat": Like opening a box to check if the cat is alive: before opening, it's both alive and dead (superposition); upon opening, it collapses to one state. Measuring a qubit destroys its superposition and returns a single random outcome, so algorithms delay measurement until the end and are run many times. The statistics over those repeated runs are what give a reliable answer.
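
To make the counting above concrete, here is a minimal sketch in plain Python. It is only an illustration, not how real quantum hardware or any particular library works: the function names `uniform_superposition` and `measure` are made up for this example, and the state is simulated classically as a list of amplitudes. It shows that n qubits need 2^n amplitudes and that each measurement returns just one definite bit string.

```python
import math
import random
from collections import Counter

def uniform_superposition(n_qubits):
    """Return a state vector with equal amplitude on every basis state.

    An n-qubit state needs 2**n amplitudes, one per bit string.
    """
    dim = 2 ** n_qubits
    amplitude = 1 / math.sqrt(dim)
    return [amplitude] * dim

def measure(state, shots, rng):
    """Simulate repeated measurement of a freshly prepared state.

    Outcome k is drawn with probability |amplitude_k|**2; each shot
    yields one definite bit string, never the whole superposition.
    """
    n_qubits = (len(state) - 1).bit_length()
    probabilities = [abs(a) ** 2 for a in state]
    outcomes = rng.choices(range(len(state)), weights=probabilities, k=shots)
    return Counter(format(k, f"0{n_qubits}b") for k in outcomes)

state = uniform_superposition(2)
counts = measure(state, shots=1000, rng=random.Random(0))

print(len(uniform_superposition(5)))  # 32 amplitudes for 5 qubits
print(dict(counts))                   # roughly 250 counts per bit string
```

The repeated shots are the point: a single run tells you almost nothing, while the histogram over many runs approximates the underlying probabilities.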

To utilize these quantum superpowers for computation, researchers construct corresponding quantum circuits. In classical computers, bits perform operations through logic gates like AND, OR, and NOT; similarly, in quantum computers, we apply quantum gates to qubits to manipulate their states. Through a series of quantum gate combinations, quantum algorithms are executed, evolving the input state into the desired output state distribution.
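
As a rough illustration of what "applying quantum gates" means, the sketch below simulates a two-gate circuit with plain Python lists. It assumes the standard textbook matrices for the Hadamard and CNOT gates; the helper names `apply_gate` and `kron` are invented for this example. Starting from 00, a Hadamard on the first qubit followed by a CNOT produces an entangled pair whose only possible outcomes are 00 and 11.

```python
import math

def apply_gate(gate, state):
    """Multiply a gate matrix (list of rows) by a state vector."""
    return [sum(g * a for g, a in zip(row, state)) for row in gate]

def kron(a, b):
    """Kronecker product, used to lift a 1-qubit gate to a 2-qubit system."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]

s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]   # puts one qubit into superposition
IDENTITY = [[1, 0], [0, 1]]    # leaves a qubit untouched
CNOT = [                       # flips the second qubit if the first is 1
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]

state = [1, 0, 0, 0]                                  # both qubits start in 0
state = apply_gate(kron(HADAMARD, IDENTITY), state)   # Hadamard on first qubit
state = apply_gate(CNOT, state)                       # entangle the pair

bell_probs = [round(abs(a) ** 2, 3) for a in state]
print(bell_probs)  # [0.5, 0.0, 0.0, 0.5] for outcomes 00, 01, 10, 11
```

Simulating gates this way costs memory that doubles with every added qubit, which is exactly why classical machines struggle to imitate larger quantum circuits.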

However, it is important to emphasize that quantum computing does not outperform classical computing on all problems. Some tasks are still more efficient for classical computers, and the value of quantum computing lies in solving certain problems that classical computers can barely handle, rather than completely replacing classical computing. Currently known quantum algorithm advantages are mainly concentrated in specific areas, such as integer factorization, database search, and quantum chemistry simulation, and are still far from truly general-purpose super-high-speed computing.
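
To give a feel for how specific these advantages are, the short sketch below compares query counts for the database search case. It relies on two standard textbook estimates rather than anything measured: a classical search for one marked item among N needs about N/2 lookups on average, while Grover's algorithm needs roughly (π/4)·√N oracle calls. The function names are made up for this illustration.

```python
import math

def classical_expected_queries(n_items):
    """Average lookups to find one marked item by checking items one by one."""
    return (n_items + 1) / 2

def grover_queries(n_items):
    """Approximate number of oracle calls for Grover's search."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))

for n in (10**3, 10**6, 10**9):
    print(n, classical_expected_queries(n), grover_queries(n))
```

The gain here is quadratic, not exponential, which is one reason quantum computers are not expected to speed up every task.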

Despite the enticing prospects, truly harnessing the "three superpowers" of quantum computing requires overcoming two "hard thresholds."

- The first is the fragility of quantum states. Qubits are extremely sensitive to their environment; tiny thermal disturbances, electromagnetic noise, and other factors can interfere with quantum states, causing superposition and entanglement to rapidly disappear, a phenomenon known as quantum decoherence. To prevent qubits from "losing their magic," researchers working with superconducting qubits must keep the operating environment at temperatures near absolute zero and isolate it from vibrations and electromagnetic interference. Even so, the coherence time of current qubits remains very short, which limits the number of gate operations that can be performed before the state degrades and poses a severe challenge to quantum computing.

- The second threshold is error correction and scalability. Because qubits are prone to errors and their states cannot be directly copied, classical error checking and redundancy methods are difficult to apply directly, and quantum computing requires entirely new quantum error correction schemes. In theory, quantum error correction codes can represent one "fault-tolerant" logical qubit using multiple physical qubits, but this often requires hundreds or thousands of times the resource overhead, which is extremely difficult at current technological levels. Furthermore, to realize the advantages of quantum computing, hundreds or thousands of high-quality qubits often need to work together, which places extremely high demands on fabrication processes and control technologies. Quantum computers therefore need a breakthrough in "quality" and a leap in "quantity" at once: reducing the error rate of individual qubits and integrating more qubits under effective control are both indispensable, and each is a hard threshold in its own right.
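
The trade of many physical units for one more reliable logical unit can be sketched with a deliberately simplified classical analogy. The code below is a three-copy repetition code with majority voting, which is only the intuition behind the simplest quantum bit-flip code: real quantum schemes cannot copy or directly read the data qubits and must detect errors indirectly. All function names and the error probability used are illustrative assumptions.

```python
import random

def encode(bit):
    """Store one logical bit as three physical copies."""
    return [bit, bit, bit]

def noisy_channel(bits, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ (rng.random() < p) for b in bits]

def decode(bits):
    """Recover the logical bit by majority vote."""
    return int(sum(bits) >= 2)

def logical_error_rate(p):
    """Exact failure probability: two or three of the three bits flipped."""
    return 3 * p**2 * (1 - p) + p**3

def simulate(p, trials, rng):
    """Estimate the logical error rate by repeated encode, corrupt, decode."""
    failures = sum(
        decode(noisy_channel(encode(0), p, rng)) != 0 for _ in range(trials)
    )
    return failures / trials

p = 0.1  # illustrative physical error probability, not a measured value
print(logical_error_rate(p))
print(simulate(p, trials=20000, rng=random.Random(1)))
```

With a physical error rate of 0.1, the logical error rate falls to about 0.028, and it falls further as p shrinks. The benefit only appears when p is already small, which is why lower error rates and larger qubit counts have to advance together.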

IV. From Research to Commercialization

What can quantum computers actually do? The answer evolves in layers. More importantly, they could fundamentally reshape daily life and society, much as the Industrial Revolution transformed production from the steam engine onward. Below we look at specific transformations, impacts on the crypto industry, and energy.

- Short-Term Applications (Noisy Intermediate-Scale Quantum, NISQ): Quantum Simulation: Directly simulate molecular behavior for drug discovery and materials science. Example: simulation of this kind could help accelerate vaccine design (for diseases such as COVID-19), potentially cutting lab-to-market time from years to months.

- Medium-Term Applications (Mid-Scale Fault-Tolerant Systems): Optimization & Machine Learning: In finance, optimize portfolios by evaluating very large numbers of market scenarios to limit losses; in logistics, plan global supply chains, potentially reducing carbon emissions by 10-20%. Crypto Industry Revolution: Shor's algorithm could break RSA encryption, forcing a global shift to "post-quantum cryptography." Bitcoin and other blockchains would need to upgrade their protocols, which may cause short-term market volatility but enable quantum-resistant digital currencies in the long term. Industrial Revolution Impact: Just as steam engines freed up manual labor, quantum computing could automate complex decisions and drive a transformation in smart manufacturing, potentially worth trillions in annual value added.

- Long-Term Applications (Large-Scale Fault-Tolerant Systems): Daily Life Transformations:

- Healthcare: Personalized drug design enables breakthrough cancer treatments and targeted therapies, significantly extending healthy lifespans through quantum-accelerated genomics.

- Energy: Optimization of battery materials accelerates the shift to renewable energy sources and reduces reliance on fossil fuels.

- Transportation: Real-time optimization of global flights and autonomous driving routes dramatically reduces urban congestion.

- Finance & Crypto: Creates quantum-resistant blockchain systems for more secure digital currencies; could substantially speed up AI training to enable smart cities.

- Societal Impact: Like the Industrial Revolution's shift from craft workshops to factories, quantum computing could move society from an era limited by compute to one of ubiquitous intelligence, while requiring careful management of workforce transitions and ethical considerations.

V. Industry Landscape: Routes, Cloud, and the "Pick-and-Shovel Sellers"

The current quantum computing industry is advancing along multiple technological routes simultaneously, and the entire ecosystem is being driven by cloud services and supporting industries. Below we detail mainstream routes and leading companies (based on 2025 advancements).

Technological Routes & Leading Companies:

- Superconducting Circuit Route (Scale Leader, Fast Operation):

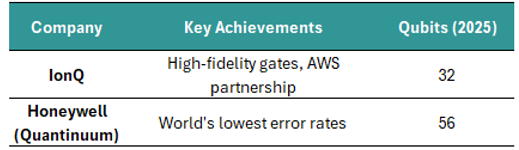

- Ion Trap Route (Highest Precision, Long Coherence):

- Photonic Quantum Route (Room-Temp, Transmission Advantage):

These routes complement each other, and no single winner is likely in the short term. Cloud Service Leaders: IBM Quantum (first to open access, 100K+ users), Amazon Braket (multi-route aggregator), Microsoft Azure Quantum (strongest software ecosystem), Google Quantum AI (research-focused).

Beyond these, there are also some "Pick-and-Shovel" leaders worth following:

- Bluefors: Global leader in ultra-low-temperature refrigerators, which provide the ultra-cold environment that quantum computing experiments require

- Keysight (KEYS US): Precision electronic control equipment for stable qubit operation

VI. Future Opportunities

For investors interested in quantum computing, this is a frontier field full of opportunities, but also one with long-term uncertainties. We suggest following three main lines to identify investment directions.

- First, focus on the competition among quantum computing hardware routes: Currently, superconducting, ion trap, and photonic quantum technologies are developing in parallel, each with its own advantages and disadvantages. Investors may want to pay attention to leading companies that master core technologies, such as international giants like IBM and Google in superconducting solutions, and emerging players like IonQ in ion trap solutions, diversifying appropriately to avoid the risk of betting on a single route.

- Second, position early in the quantum computing ecosystem: Early positioning in software, algorithms, and applications is equally important. Some startups are developing quantum algorithms and software platforms for industries such as finance and pharmaceuticals, and large cloud service providers are also beginning to offer quantum computing cloud access. These "water seller" type companies are expected to gain commercial value even before hardware is fully mature, while preparing traditional industries to interface with quantum technology.

- Third, pay attention to opportunities in derivative directions: The rise of quantum computing will force upgrades in cybersecurity, so new investment opportunities may emerge in areas such as post-quantum cryptography, quantum communication, and quantum metrology, and breakthroughs in these areas will raise the value of the entire quantum industry.

Overall, investing in quantum computing requires patience and strategic resolve. It is crucial to closely monitor industry milestones (such as leaps in qubit count and error correction capability), stay rational while planning for the future, and not be swayed by short-term speculation. Investors who follow these main lines with discipline will be better placed to share in the long-term gains of the quantum computing race.