Data center is “Hot” - time to make it “Cool”

Walk into a modern data center and the first thing you notice isn't the blinking servers — it’s the sound. The constant hum of fans pushing hot air away from processors and into massive cooling systems has long been the heartbeat of the cloud economy. For years, bigger fans and better airflow engineering were enough to keep servers safe.

Not anymore.

Artificial intelligence has changed the physics of computing. Chips are drawing more power and generating more heat than air can practically remove. That shift is now forcing a once-in-decades infrastructure transition — from air to liquid — and it is creating one of the most overlooked investment opportunities in the AI supply chain.

The Thermal Wall: Why Air Cooling Is Breaking

To understand liquid cooling’s rise, consider the heat profile of AI chips.

• NVIDIA’s V100 GPU (2017) consumed around 300 watts.

• The H100 (2022) jumped to 700W.

• The latest Blackwell GPUs (2024+) reportedly push 1,200W per chip.

• Cloud providers are planning for 2,000W and higher in coming generations.

That’s just per chip. A fully loaded AI rack can now exceed 100 kilowatts, with early training clusters climbing into the 200–300kW range.
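To see how per-chip wattage compounds at the rack level, here is a rough back-of-the-envelope sketch. The per-chip figures are the ones listed above; the count of 72 GPUs per rack is a hypothetical value chosen for illustration, not a number from any specific product.

```python
# Rough sketch: GPU-only power per rack for the chip generations listed above.
# HYPOTHETICAL_GPUS_PER_RACK is an illustrative assumption, not a vendor spec.

GPU_WATTS = {"V100": 300, "H100": 700, "Blackwell": 1200}
HYPOTHETICAL_GPUS_PER_RACK = 72


def rack_gpu_power_kw(gpus_per_rack: int, watts_per_gpu: float) -> float:
    """Return the GPU-only electrical load of one rack in kilowatts."""
    return gpus_per_rack * watts_per_gpu / 1000.0


for name, watts in GPU_WATTS.items():
    kw = rack_gpu_power_kw(HYPOTHETICAL_GPUS_PER_RACK, watts)
    print(f"{name}: {kw:.1f} kW of GPU load per rack")
```

Under that assumption the GPUs alone approach 90 kW at 1,200W per chip, before counting CPUs, networking, and power-conversion losses, which is how a fully loaded rack ends up above 100 kilowatts.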

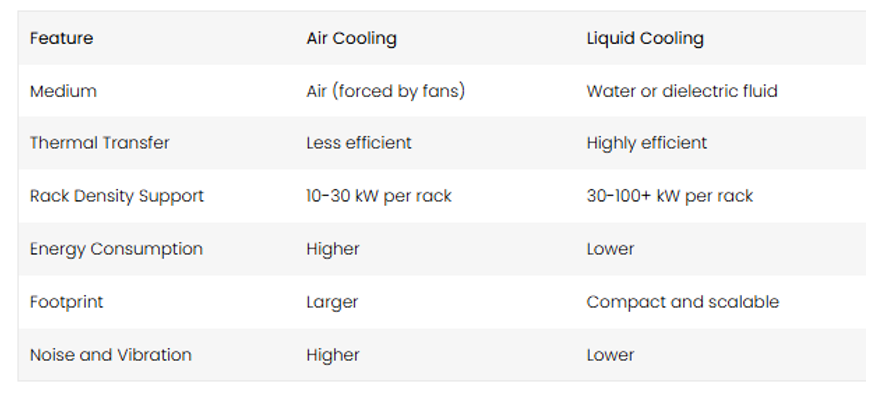

Traditional air systems weren’t built for this. Air has low thermal conductivity, meaning you must move vast quantities of it to remove heat. That requires larger fans, higher airflow, louder rooms, and ultimately bigger buildings to house the equipment. The economics break before the physics do.
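The "vast quantities" point can be made concrete with the standard sensible-heat relation: heat removed equals density times specific heat times volumetric flow times temperature rise. The sketch below applies it to a 100 kW rack. The air properties are textbook room-temperature values, and the 15 K temperature rise across the rack is an assumption picked for illustration.

```python
# Sketch: airflow needed to carry a given heat load, using
# heat = density * specific_heat * volumetric_flow * temperature_rise.
# The 15 K rise used below is an illustrative assumption.

AIR_DENSITY = 1.2          # kg/m^3, approximate at room temperature
AIR_SPECIFIC_HEAT = 1005   # J/(kg*K), approximate
M3S_TO_CFM = 2118.88       # cubic metres per second to cubic feet per minute


def airflow_m3_per_s(heat_watts: float, delta_t_kelvin: float) -> float:
    """Volumetric airflow required to remove heat_watts at a given air temperature rise."""
    return heat_watts / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_kelvin)


flow = airflow_m3_per_s(100_000, 15)
print(f"{flow:.2f} m^3/s (about {flow * M3S_TO_CFM:,.0f} CFM)")
```

With these inputs a single 100 kW rack needs roughly 5.5 cubic metres of air per second, which is why fan size, noise, and floor space escalate so quickly.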

Cooling used to be a secondary concern — a rounding error next to compute. Now it is a gating factor. If you can’t remove heat, you can’t deploy GPUs — and in the AI era, idle silicon is unacceptable.

Liquid Cooling: A Simple Physics Upgrade

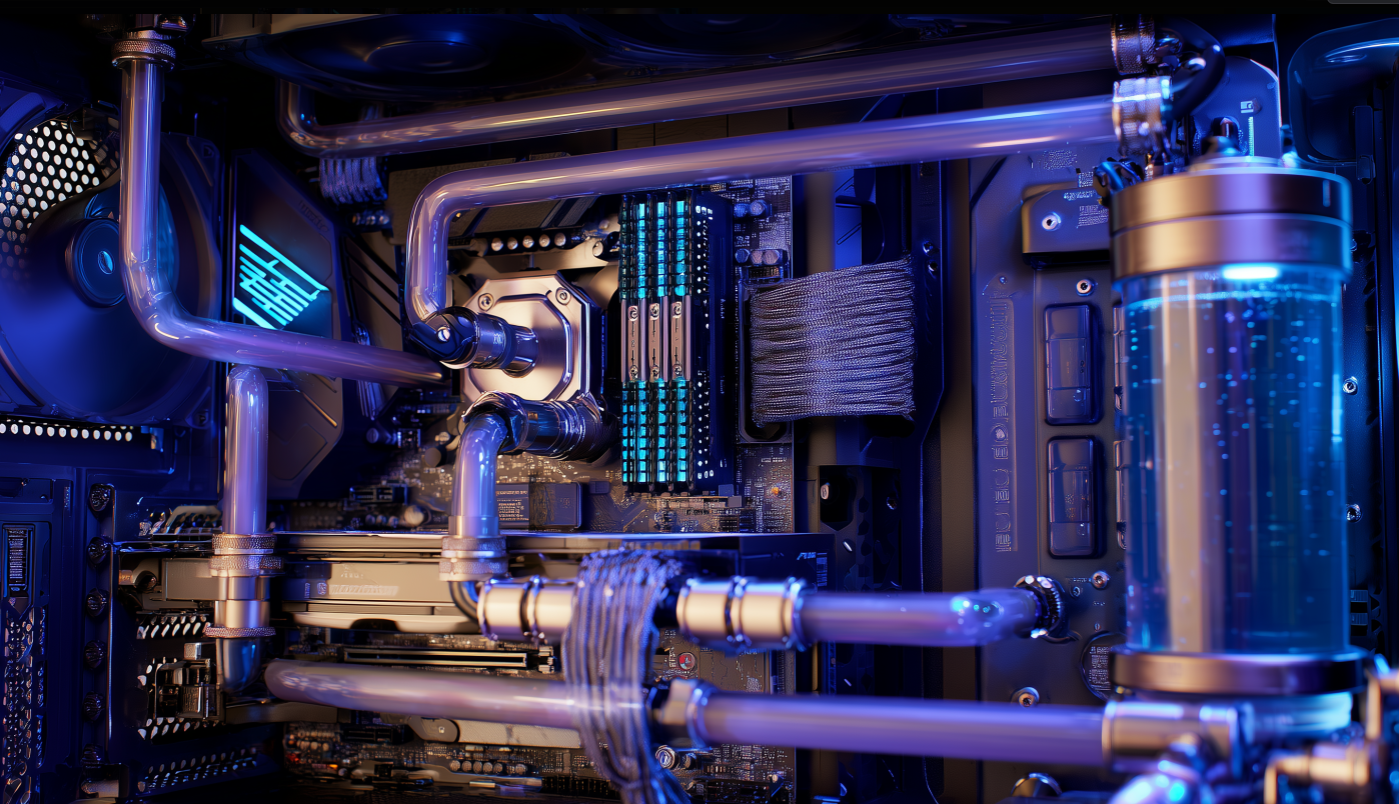

Liquid cooling isn’t just a new tool — it’s a change of medium. Instead of blasting chips with air, coolant is brought directly to the source of heat.

Water and engineered fluids are:

• 20–100x more thermally conductive than air.

• 800x denser, meaning they carry far more heat per unit of volume.

• Capable of supporting rack densities 3–5x higher than air.
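The density point in the list above can be checked with a quick comparison of how much heat a given volume of each fluid carries per degree of temperature rise. This is a minimal sketch using textbook properties for air and plain water; engineered dielectric fluids would give different numbers, and the 100 kW load and 10 K rise are illustrative assumptions.

```python
# Sketch: compare air and water as heat carriers for the same load.
# Properties are approximate room-temperature textbook values.

FLUIDS = {
    # name: (density in kg/m^3, specific heat in J/(kg*K))
    "air": (1.2, 1005),
    "water": (997, 4180),
}


def volumetric_heat_capacity(fluid: str) -> float:
    """Heat carried per cubic metre per kelvin, in J/(m^3*K)."""
    density, specific_heat = FLUIDS[fluid]
    return density * specific_heat


def flow_litres_per_min(fluid: str, heat_watts: float, delta_t_kelvin: float) -> float:
    """Volumetric flow needed to remove heat_watts at the given temperature rise."""
    m3_per_s = heat_watts / (volumetric_heat_capacity(fluid) * delta_t_kelvin)
    return m3_per_s * 1000 * 60


ratio = volumetric_heat_capacity("water") / volumetric_heat_capacity("air")
print(f"Water carries about {ratio:,.0f}x more heat per unit volume than air")
for name in FLUIDS:
    print(f"{name}: {flow_litres_per_min(name, 100_000, 10):,.0f} L/min for 100 kW")
```

The roughly 800x density advantage multiplies with water's roughly 4x higher specific heat, so the same 100 kW that needs hundreds of thousands of litres of air per minute needs on the order of 150 litres of water per minute.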

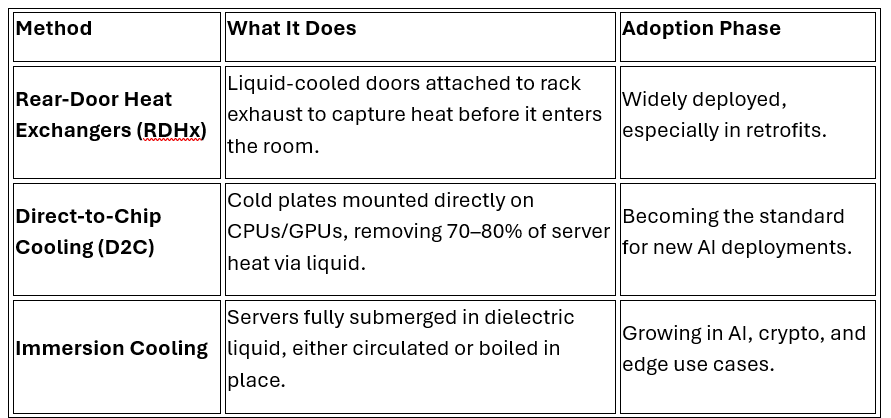

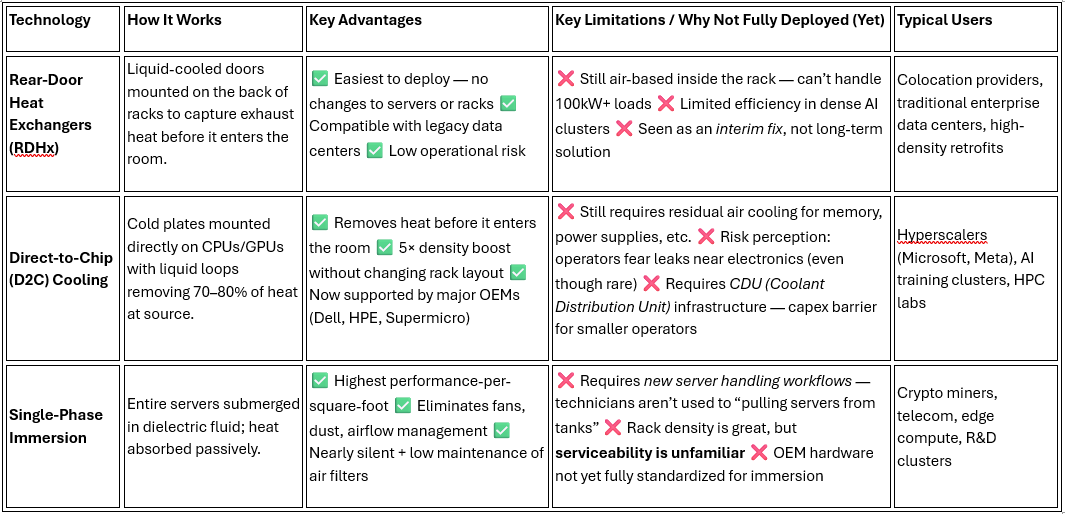

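Liquid cooling comes in a few architectures, and the easiest way to think about them is by contact level: rear-door heat exchangers (RDHx) cool the air as it leaves the rack, direct-to-chip (D2C) cold plates sit on the hottest components, and immersion submerges the hardware in fluid.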

In most facilities, operators don’t need to choose one method. They mix and match. A common hybrid model might pair D2C plates for GPUs with air or RDHx for everything else. This allows gradual adoption without rebuilding the entire facility.

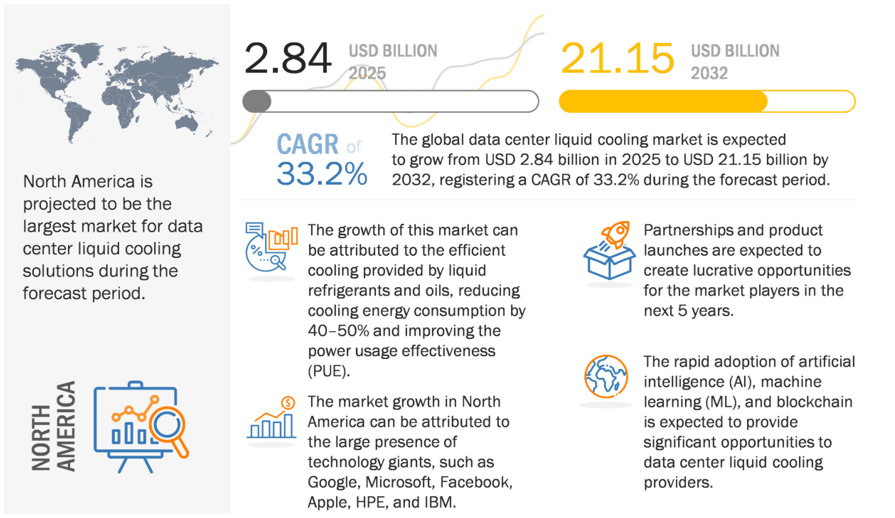

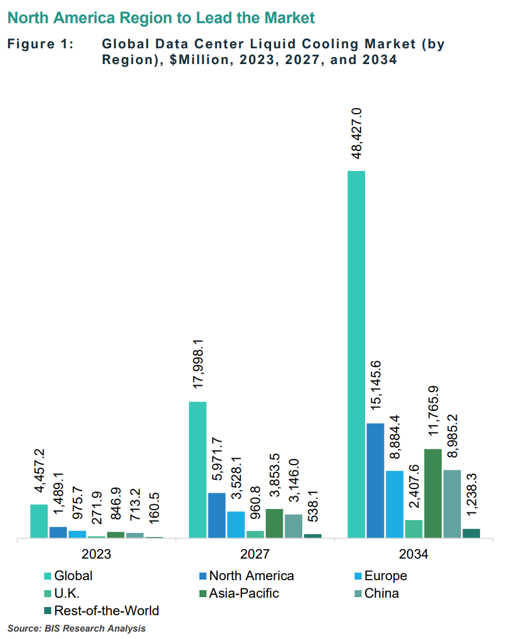

Despite the headlines, liquid cooling is still early in its adoption curve, which is exactly why investors are paying attention. According to MarketsandMarkets, the global data center liquid cooling market is projected to grow from USD 2.8 billion in 2025 to USD 21.1 billion by 2032, a CAGR of just over 33%.
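The growth rate follows directly from the two endpoints of the forecast. As a quick sanity check, the sketch below applies the standard compound annual growth rate formula to the figures quoted above; the function name is just an illustrative helper.

```python
# Sketch: compound annual growth rate implied by the quoted forecast.

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(2.8, 21.1, 2032 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

Seven years of compounding from USD 2.8 billion to USD 21.1 billion works out to a little over 33% per year, matching the headline number.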

Who’s Driving Adoption? Follow the Hyperscalers

Liquid cooling isn’t being pushed by vendors — it is being pulled by hyperscalers.

• Microsoft, Google, Meta, and Amazon are actively installing liquid-cooled racks across AI clusters.

• NVIDIA now collaborates directly with cooling partners to ensure deployments can scale thermally.

• Colocation providers are converting suites into “liquid-ready” environments to attract GPU tenants.

• Finance, research, telecom, and high-frequency trading firms are adopting immersion to pack more compute into each cubic meter of space.

Asia and Europe are following, but North America is leading, driven by cloud capex and regulatory pressure around energy efficiency and heat reuse.

Today’s Workhorses vs Tomorrow’s Disruptors

Not all liquid cooling is created equal. The technologies being deployed today are what operators call “deployment-grade thermal tools” — meaning they work with existing server formats and don’t force data centers to rewrite their architecture.

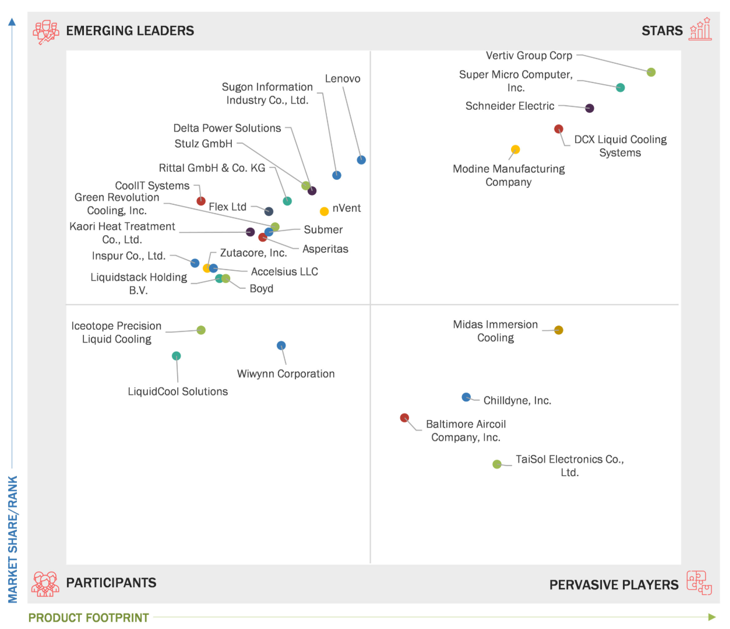

The Investment Case: Why Vertiv (VRT) Is the Cleanest Liquid-Cooling Play

If AI chips are the oil, liquid cooling is the pipeline system that moves and stabilizes the heat. And in that pipeline business, one company stands out: Vertiv (NYSE: VRT).

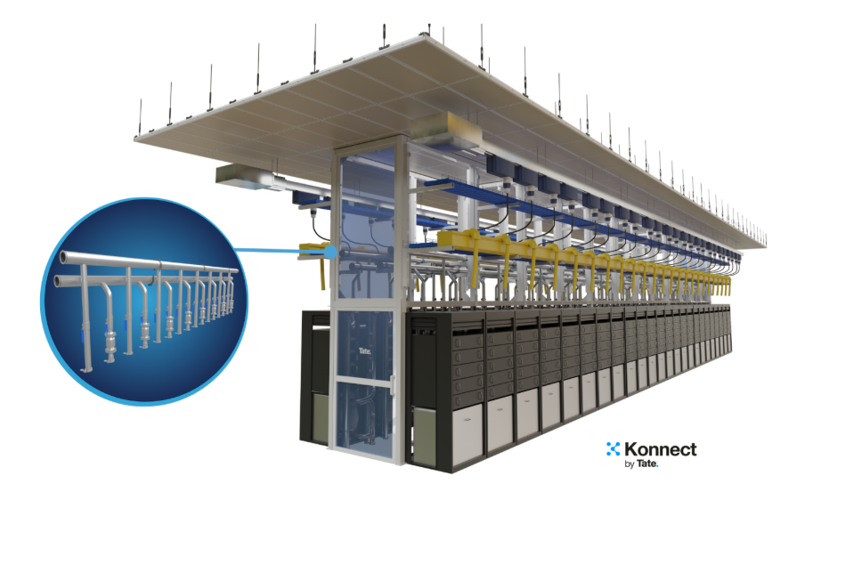

Vertiv makes the infrastructure behind liquid cooling, including:

• Coolant Distribution Units (CDUs): the pumping stations that move liquid between racks and facility systems.

• Rear-Door Heat Exchangers: retrofittable solutions that turn standard racks into liquid-capable units.

• Immersion tanks and modular systems.

• Heat-rejection gear (dry coolers, chillers) designed for warmer liquid return temperatures.

• Full-service integration and maintenance, which is crucial because operators don’t want to piece together a system from random suppliers.

Recent case studies show Vertiv’s immersion systems cutting PUE (power usage effectiveness) from 1.6 to 1.25, halving colocation space, and trimming CO₂ output by over 20% annually. That’s not just an energy story — it’s a revenue-per-square-foot story.
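To put the PUE figures in context: PUE is total facility power divided by IT power, so for a fixed IT load the facility draw scales directly with PUE. The sketch below works through the 1.6 to 1.25 improvement; the 1,000 kW IT load is a hypothetical value chosen for illustration.

```python
# Sketch: what a PUE improvement means for total facility power.
# PUE = total facility power / IT equipment power.
# HYPOTHETICAL_IT_LOAD_KW is an illustrative assumption.

HYPOTHETICAL_IT_LOAD_KW = 1000.0


def facility_power_kw(it_load_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE."""
    return it_load_kw * pue


def total_power_saving(pue_before: float, pue_after: float) -> float:
    """Fractional cut in total facility power at a constant IT load."""
    return 1 - pue_after / pue_before


before = facility_power_kw(HYPOTHETICAL_IT_LOAD_KW, 1.6)
after = facility_power_kw(HYPOTHETICAL_IT_LOAD_KW, 1.25)
print(f"Facility power: {before:.0f} kW -> {after:.0f} kW")
print(f"Overhead: {before - HYPOTHETICAL_IT_LOAD_KW:.0f} kW -> {after - HYPOTHETICAL_IT_LOAD_KW:.0f} kW")
print(f"Total saving: {total_power_saving(1.6, 1.25):.1%}")
```

At a constant IT load the total draw falls by about 22%, which lines up with the quoted CO₂ reduction of over 20% if the energy mix is unchanged, while the non-IT overhead itself shrinks by more than half.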

Wall Street is already paying attention. The stock has more than doubled in the past year, but the long runway remains intact. As rack densities rise, Vertiv’s “content per deployment” scales naturally. Each AI rack doesn’t just need GPUs — it needs pumps, manifolds, doors, heat exchangers, controls, and service contracts.

If you believe AI is still in early innings, VRT is a picks-and-shovels play on the thermal bottleneck.

The Next Frontier: Microfluidics and Phase-Change Cooling

While most of today’s deployments rely on circulating water or oil, more advanced methods are coming — and they could shift the landscape again.

Two-phase liquid cooling leverages boiling and condensation inside sealed cold plates. Rather than just warming up, the coolant changes state, absorbing far more heat in the process. This allows higher return temperatures (meaning no chillers in many climates) and lower pumping power.

Even more futuristic is microfluidics, where tiny channels or jet-like streams are etched directly into cooling plates — or eventually into chip substrates themselves. Microsoft and various research partners have actively explored micro-jet cooling, which could one day cool 5,000-watt chips at the die level.

These technologies are early and not yet commercial at hyperscale. But investors should treat them as long-dated options rather than threats. Even if cooling moves closer to the chip, someone still needs to move heat out of the rack and out of the building. That means CDUs, heat rejection, and controls — exactly where Vertiv plays.

Where the Market Goes From Here

Liquid cooling is not a speculative concept. It is already being installed at scale. The shift won’t be instantaneous, but the trend is irreversible. The logic is simple:

• AI chips will keep getting hotter.

• Air will keep getting less economical at scale.

• Liquid will absorb an increasing share of new deployments.

The most likely path looks like this:

1. Today: Hybrid rooms — RDHx + D2C + air.

2. Next 5 years: D2C becomes the default for AI-optimized halls.

3. Longer term: Immersion and phase-change approaches expand as costs fall.

4. Eventually: Microfluidic designs bring cooling into the package itself.

Final Thought: Don’t Just Bet on GPUs. Focus on What Keeps Them Alive.

Investors have piled into chipmakers like NVIDIA — and rightly so. But the next leg of AI infrastructure returns may come not from silicon, but from what keeps silicon running at full speed.

In the end, AI performance is not just a compute problem. It is a heat problem. And in solving that heat problem, liquid cooling isn’t just another line item; it’s the foundation layer that makes the entire AI economy physically possible. That’s why Vertiv deserves a place on every AI infrastructure watchlist. When the world goes liquid, you want to own the plumbing.